ON THE FIRST DAY OF AUTUMN, 1998, Florence Griffith Joyner, former Olympic track gold medalist, died in her sleep at the age of thirty-eight when her heart stopped beating. That same fall, Canadian ice hockey player Stéphane Morin, age twenty-nine, died of sudden heart failure during a hockey game in Germany, leaving behind a wife and newborn son. Chad Silver, who had played on the Swiss national ice hockey team, also age twenty-nine, died of a heart attack. Former Tampa Bay Buccaneers nose tackle Dave Logan collapsed and died from the same cause. He was forty-two.

None of these athletes had any history of heart disease.

A decade later, responding to mounting alarm among the sports community, the Minneapolis Heart Institute Foundation created a National Registry of Sudden Deaths in Athletes. After combing through public records, news reports, hospital archives, and autopsy records, the Foundation identified 1,049 American athletes in thirty-eight competitive sports who had suffered sudden cardiac arrest between 1980 and 2006.

The data confirmed what the sports community already knew. In 1980, heart attacks in young athletes were rare: only nine cases occurred in the United States. The number rose gradually but steadily, increasing about ten percent per year, until 1996, when the number of cases of fatal cardiac arrest among athletes suddenly doubled. There were 64 that year, and 66 the following year. In the last year of the study, 76 competitive athletes died when their hearts gave out, most of them under eighteen years of age.
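Simple compound growth makes the phrase "about ten percent per year" concrete. The Python sketch below is illustrative only. It assumes an exact 10 percent annual rate starting from the nine cases of 1980. That is a simplification of the registry's year-by-year counts, not a model published by the Foundation.

```python
# Sketch: what a steady "about ten percent per year" rise implies.
# Assumption: exact 10% compounding from the nine cases reported for 1980;
# this is an illustration, not the registry's own analysis.

def projected_cases(base: float, annual_rate: float, years: int) -> float:
    """Return base * (1 + annual_rate) ** years (simple compound growth)."""
    return base * (1 + annual_rate) ** years


BASE_1980 = 9        # cases in 1980, from the text
RATE = 0.10          # "about ten percent per year", assumed exact here
OBSERVED_1996 = 64   # cases in 1996, from the text

trend_1995 = projected_cases(BASE_1980, RATE, 1995 - 1980)
trend_1996 = projected_cases(BASE_1980, RATE, 1996 - 1980)

print(f"Projected 1995: {trend_1995:.1f}")
print(f"Projected 1996: {trend_1996:.1f}")
print(f"Observed 1996 / projected 1995: {OBSERVED_1996 / trend_1995:.2f}")
```

Under that assumption, the trend reaches roughly 38 cases by 1995 and 41 by 1996. The 64 cases recorded in 1996 are therefore about 1.7 times the 1995 projection, a break in the trend that steady 10 percent growth does not account for.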

The American medical community was at a loss to explain it. But in Europe, some physicians thought they knew the answer, not only to the question of why so many young athletes’ hearts could no longer stand the strain of exertion, but to the more general question of why so many young people were succumbing to diseases from which only old people used to die. On October 9, 2002, an association of German doctors specializing in environmental medicine began circulating a document calling for a moratorium on antennas and towers used for mobile phone communications.

Electromagnetic radiation, they said, was causing a drastic rise in both acute and chronic diseases, prominent among which were “extreme fluctuations in blood pressure,” “heart rhythm disorders,” and “heart attacks and strokes among an increasingly younger population.”

Three thousand physicians signed this document, named the Freiburger Appeal after the German city in which it was drafted. Their analysis, if correct, could explain the sudden doubling of heart attacks among American athletes in 1996: that was the year digital cell phones first went on sale in the United States, and the year cell phone companies began building tens of thousands of cell towers to make them work.

Guglielmo Marconi, the father of radio, suffered ten heart attacks after he began his world-changing work, including the one that killed him at the relatively young age of sixty-three.

“Anxiety disorder,” which is rampant today, is most often diagnosed from its cardiac symptoms.

Many people suffering from an acute “anxiety attack” have heart palpitations, shortness of breath, and pain or pressure in the chest. These symptoms so often resemble an actual heart attack that hospital emergency rooms see more patients who turn out to have nothing more than “anxiety” than patients who prove to have something wrong with their hearts.

“Anxiety neurosis” was an invention of Sigmund Freud: a new name for the disease formerly called neurasthenia, which became prevalent only in the late nineteenth century, after the first electrical communication systems were built.

Radio wave sickness, described by Russian doctors in the 1950s, includes cardiac disturbances as a prominent feature.

Heart disease became a prominent subject in the medical literature only at the beginning of the twentieth century. American doctors were eventually taught that cholesterol was its primary cause, while the medical establishment completely ignored the knowledge that existed in Europe concerning the dangers of radio-frequency electromagnetic radiation.

In 1996 the telecommunications industry promised a “wireless revolution.” The industry wanted to place a cell phone in the hands of every American, and to make those devices work in cities and towns across the country, it was applying for permission to erect thousands of microwave antennas. Advertisements for the newfangled phones were beginning to appear on radio and television, telling the public why they needed such things and that they would make ideal Christmas gifts.

That same year, the FCC released human exposure guidelines for microwave radiation. The new guidelines were written by the cell phone industry itself and did not protect people from any of the effects of microwave radiation except one: being cooked like a roast in a microwave oven. None of the known effects of such radiation, apart from heat—effects on the heart, nervous system, thyroid gland, and other organs—were taken into consideration.

Worse, earlier that year Congress had passed a law, the Telecommunications Act of 1996, that made it illegal for cities and states to regulate this new technology on the basis of health. President Clinton had signed it on February 8.

The industry, the FCC, Congress, and the President were conspiring to tell us that we should all feel comfortable holding devices that emit microwave radiation directly against our brains, and that we should all get used to living in close quarters with microwave towers, because they were coming to a street near you whether you liked it or not. A giant biological experiment had been launched, and we were all going to be unwitting guinea pigs.

Except that the outcome was already known. The research had been done, and the scientists who had done it were trying to tell us what the new technology was going to do to the brains of cell phone users, and to the hearts and nervous systems of people living in the vicinity of cell towers—which one day soon was going to be everybody.

Dr. Samuel Milham, Jr. was one of those researchers. He had not done any of the clinical or experimental research on individual humans or animals; such work had been done by others in previous decades. Milham is an epidemiologist, a scientist who determines whether the results obtained by others in the laboratory actually show up in large populations living in the real world. In his early studies he had shown that electricians, power line workers, telephone linesmen, aluminum workers, radio and TV repairmen, welders, and amateur radio operators (those whose work exposed them to electricity or electromagnetic radiation) died far more often than the general public from leukemia, lymphoma, and brain tumors. He knew that the new FCC standards were inadequate, and he made himself available as a consultant to those who were challenging them in court.

Samuel Milham, M.D., M.P.H.

In recent years, Milham has turned his skills to examining vital statistics from the 1930s and 1940s, when the Roosevelt administration made it a national priority to electrify every farm and rural community in America. What Milham discovered surprised even him. Not only cancer, he found, but also diabetes and heart disease seemed to be directly related to residential electrification.

Rural communities that had no electricity had little heart disease—until electric service began. In fact, in 1940, rural residents in electrified regions of the country were suddenly dying of heart disease four to five times as frequently as those who still lived beyond electricity’s reach. “It seems unbelievable that mortality differences of this magnitude could go unexplained for over 70 years after they were first reported,” wrote Milham. He speculated that early in the twentieth century nobody was looking for answers.

Dr. Milham wrote an article in 2010, followed by a short book, suggesting that the modern epidemics of heart disease, diabetes, and cancer are largely if not entirely caused by electricity. He included solid statistics to back up these assertions.

Paul Dudley White, a well-known cardiologist associated with Harvard Medical School, puzzled over the problem in 1938. In the second edition of his textbook, Heart Disease, he wrote in amazement that Austin Flint, a prominent physician practicing internal medicine in New York City during the second half of the nineteenth century, had not encountered a single case of angina pectoris (chest pain due to heart disease) during one five-year period. White was troubled by the tripling of heart disease rates in his home state of Massachusetts since he had begun practicing in 1911. “As a cause of death,” he wrote, “heart disease has assumed greater and greater proportions in this part of the world until now it leads all other causes, having far outstripped tuberculosis, pneumonia, and malignant disease.” In 1970, at the end of his career, White was still unable to say why this was so. All he could do was wonder at the fact that coronary heart disease—disease due to clogged coronary arteries, which is the most common type of heart disease today—had once been so rare that he had seen almost no cases in his first few years of practice. “Of the first 100 papers I published,” he wrote, “only two, at the end of the 100, were concerned with coronary heart disease.”

Heart disease had not, however, sprung full-blown from nothing at the turn of the twentieth century. It had been relatively uncommon but not unheard of. The vital statistics of the United States show that rates of heart disease had begun to rise long before White graduated from medical school.

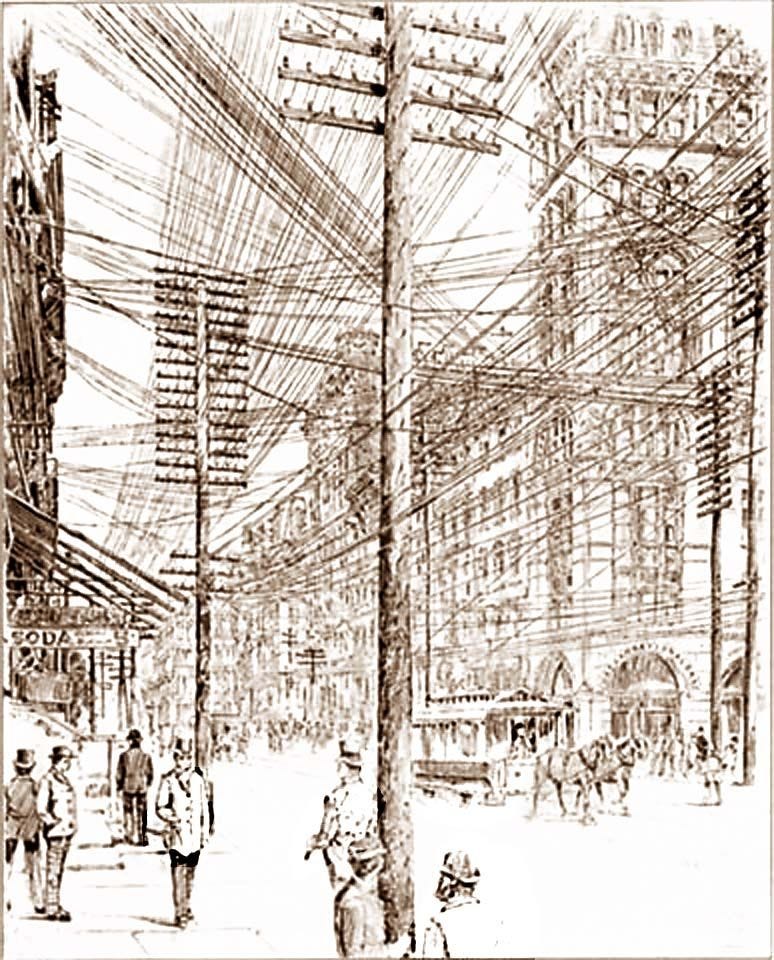

The modern epidemic actually began, quite suddenly, in the 1870s, at the same time as the first great proliferation of telegraph wires.

The evidence that heart disease is caused primarily by electricity is even more extensive than Milham suspected, and the mechanism by which electricity damages the heart is known.

We need not rely only on historical data for evidence supporting Milham’s proposal, for electrification is still under way in some parts of the world.

From 1984 to 1987, scientists at the Sitaram Bhartia Institute of Science and Research decided to compare rates of coronary heart disease in Delhi, India, which were disturbingly high, with rates in rural areas of Gurgaon district in Haryana state 50 to 70 kilometers away. Twenty-seven thousand people were interviewed, and as expected, the researchers found more heart disease in the city than in the country. But they were surprised by the fact that virtually all of the supposed risk factors were actually greater in the rural districts.

City dwellers smoked much less. They consumed fewer calories, less cholesterol, and much less saturated fat than their rural counterparts. Yet they had five times as much heart disease. “It is clear from the present study,” wrote the researchers, “that the prevalence of coronary heart disease and its urban-rural differences are not related to any particular risk factor, and it is therefore necessary to look for other factors beyond the conventional explanations.” The most obvious factor that these researchers did not look at was electricity. In the mid-1980s, the Gurgaon district had not yet been electrified.

In order to make sense of these kinds of data it is necessary to review what is known—and what is still not known—about heart disease, electricity, and the relationship between the two.

A book titled The Cholesterol Myths was published in 2000 by Danish physician Uffe Ravnskov, a specialist in internal medicine and kidney disease and a retired family practice doctor living in Lund, Sweden.

Ravnskov’s book demolishes the idea that people are having more heart attacks today because they are stuffing themselves with more animal fat than their ancestors did. On its surface, his thesis is contrary to the standard medical theory about heart disease. The most important thing to keep in mind is that the early studies did not have the same outcome as research being done today, and that there is a reason for this difference. Even recent studies from different parts of the world do not always agree with each other, for the same reason.

Ravnskov, however, has become something of an icon among portions of the alternative health community, including many environmental physicians who are now prescribing high-fat diets—emphasizing animal fats—to their severely ill patients. They are misreading the medical literature.

The studies that Ravnskov relied on show unequivocally that some factor other than diet is responsible for the modern scourge of heart disease, but they also show that cutting down on dietary fat in today’s world helps to prevent the damage caused by that other factor. Virtually every large study done since the 1950s in the industrialized world has shown a direct correlation between cholesterol and heart disease. And every study comparing vegetarians to meat eaters has found that vegetarians today have both lower cholesterol levels and a reduced risk of dying from a heart attack.

Ravnskov speculated that this is because people who eat no meat are also more health-conscious in other ways. But the same results have been found in people who are vegetarians only for religious reasons. Seventh-day Adventists all abstain from tobacco and alcohol, but only about half abstain from meat. A number of large long-term studies have shown that Adventists who are also vegetarians are two to three times less likely to die from heart disease.

The very early studies—those done in the first half of the twentieth century—did not give these kinds of results and did not show that cholesterol was related to heart disease. To most researchers, this has been an insoluble paradox, contradicting present ideas about diet, and has been a reason for the mainstream medical community to dismiss the early research.

Before the 1860s cholesterol did not cause coronary heart disease, and there is other evidence that this is so.

In 1965, Leon Michaels, working at the University of Manitoba, decided to see what historical documents revealed about fat consumption in previous centuries, when coronary heart disease was extremely rare. What he found also contradicted current wisdom and convinced him that there must be something wrong with the cholesterol theory. One author in 1696 had calculated that the wealthier half of the English population, or about 2.7 million people, ate an amount of flesh yearly averaging 147.5 pounds per person—more than the national average for meat consumption in England in 1962. Nor did the consumption of animal fats decline at any time before the twentieth century. A calculation made in 1901 showed that the servant-keeping class of England was then consuming, on average, much more fat than the same class consumed in 1950. Michaels did not think that lack of exercise could explain the modern epidemic of heart disease either, because heart disease had increased the most among the idle upper classes, who had never engaged in manual labor and who were eating much less fat than they used to.

Then there was the incisive work of Jeremiah Morris, Professor of Social Medicine at the University of London, who observed that in the first half of the twentieth century, coronary heart disease had increased while coronary atheroma—cholesterol plaques in the coronary arteries—had actually decreased. Morris examined the autopsy records at London Hospital from the years 1908 through 1949. In 1908, 30.4 percent of all autopsies in men aged thirty to seventy showed advanced atheroma; in 1949, only 16 percent. In women the rate had fallen from 25.9 percent to 7.5 percent.

In other words, cholesterol plaques in coronary arteries were far less common than before, but they were contributing to more disease, more angina, and more heart attacks. By 1961, when Morris presented a paper about the subject at Yale University Medical School, studies conducted in Framingham, Massachusetts, and Albany, New York, had established a connection between cholesterol and heart disease. Morris was sure that some other, unknown environmental factor was also important. “It is tolerably certain,” he told his audience, “that more than fats in the diet affect blood lipid levels, more than blood lipid levels are involved in atheroma formation, and more than atheroma is needed for ischemic heart disease.”

That factor, as we will see, is electricity.

Electromagnetic fields have become so intense in our environment that we are unable to metabolize fats the way our ancestors could.

The environmental factor that increased most spectacularly during the 1950s, when coronary disease was exploding among humans, was radio frequency (RF) radiation. Before World War II, radio waves had been widely used for only two purposes: radio communication, and diathermy, which is their therapeutic use in medicine to heat parts of the body.

Suddenly the demand for RF generating equipment was unquenchable. While the use of the telegraph in the Civil War had stimulated its commercial development, and the use of radio in World War I had done the same for that technology, the use of radar in World War II spawned scores of new industries. RF oscillators were being mass produced for the first time, and hundreds of thousands of people were being exposed to radio waves on the job—radio waves that were now used not only in radar, but in navigation; radio and television broadcasting; radio astronomy; heating, sealing and welding in dozens of industries; and “radar ranges” for the home. Not only industrial workers, but the entire population, was being exposed to unprecedented levels of RF radiation.

For reasons having more to do with politics than science, history took opposite tracks on opposite sides of the world. In Western Bloc countries, science went deeper into denial. It had buried its head, ostrich-like, in the year 1800. When radar technicians complained of headaches, fatigue, chest discomfort, and eye pain, and even sterility and hair loss, they were sent for a quick medical exam and some blood work. When nothing dramatic turned up, they were ordered back to work.

The attitude of Charles I. Barron, medical director of the California division of Lockheed Aircraft Corporation, was typical. Reports of illness from microwave radiation “had all too often found their way into lay publications and newspapers,” he said in 1955. He was addressing representatives of the medical profession, the armed forces, various academic institutions, and the airline industry at a meeting in Washington, DC. “Unfortunately,” he added, “the publication of this information within the past several years coincided with the development of our most powerful airborne radar transmitters, and considerable apprehension and misunderstanding has arisen among engineering and radar test personnel.” He told his audience that he had examined hundreds of Lockheed employees and found no difference between the health of those exposed to radar and those not exposed. However, his study, which was subsequently published in the Journal of Aviation Medicine, was tainted by the same see-no-evil attitude. His “unexposed” control group actually consisted of Lockheed workers who were exposed to radar intensities of less than 3.9 milliwatts per square centimeter—a level that is almost four times the legal limit for exposure of the general public in the United States today. Twenty-eight percent of these “unexposed” employees suffered from neurological or cardiovascular disorders, or from jaundice, migraines, bleeding, anemia, or arthritis.

And when Barron took repeated blood samples from his “exposed” population—those who were exposed to more than 3.9 milliwatts per square centimeter—the majority had a significant drop in their red cell count over time, and a significant increase in their white cell count. Barron dismissed these findings as “laboratory errors.”

The Eastern Bloc experience was different. Workers’ complaints were considered important.

Clinics dedicated entirely to the diagnosis and treatment of workers exposed to microwave radiation were established in Moscow, Leningrad, Kiev, Warsaw, Prague, and other cities. On average, about fifteen percent of workers in these industries became sick enough to seek medical treatment, and two percent became permanently disabled.

The Soviets and their allies recognized that the symptoms caused by microwave radiation were the same as those first described in 1869 by American physician George Beard. Therefore, using Beard’s terminology, they called the symptoms “neurasthenia,” while the disease that caused them was named “microwave sickness” or “radio wave sickness.”

Intensive research began at the Institute of Labor Hygiene and Occupational Diseases in Moscow in 1953. By the 1970s, such investigations had produced thousands of publications. Medical textbooks on radio wave sickness were written, and the subject entered the curriculum of Russian and Eastern European medical schools. Today, Russian textbooks describe effects on the heart, nervous system, thyroid, adrenals, and other organs. Symptoms of radio wave exposure include headache, fatigue, weakness, dizziness, nausea, sleep disturbances, irritability, memory loss, emotional instability, depression, anxiety, sexual dysfunction, impaired appetite, abdominal pain, and digestive disturbances. Patients have visible tremors, cold hands and feet, flushed face, hyperactive reflexes, abundant perspiration, and brittle fingernails. Blood tests reveal disturbed carbohydrate metabolism and elevated triglycerides and cholesterol.

Cardiac symptoms are prominent. They include heart palpitations, heaviness and stabbing pains in the chest, and shortness of breath after exertion. The blood pressure and pulse rate become unstable. Acute exposure usually causes rapid heartbeat and high blood pressure, while chronic exposure causes the opposite: low blood pressure and a heartbeat that can be as slow as 35 to 40 beats per minute. The first heart sound is dulled, the heart is enlarged on the left side, and a murmur is heard over the apex of the heart, often accompanied by premature beats and an irregular rhythm.

The electrocardiogram may reveal a blockage of electrical conduction within the heart, and a condition known as left axis deviation. Signs of oxygen deprivation to the heart muscle, namely a flattened or inverted T wave and depression of the ST segment, are extremely frequent. Congestive heart failure is sometimes the ultimate outcome. In one medical textbook published in 1971, the author, Nikolay Tyagin, stated that in his experience only about fifteen percent of workers exposed to radio waves had normal EKGs.

Although this knowledge has been completely ignored by the American Medical Association and is not taught in any American medical school, it has not gone unnoticed by some American researchers.

Trained as a biologist, Allan H. Frey became interested in microwave research in 1960 by following his curiosity.

Allan H. Frey

Employed at the General Electric Company’s Advanced Electronics Center at Cornell University, he was already exploring how electrostatic fields affect an animal’s nervous system, and he was experimenting with the biological effects of air ions. Late that year, while attending a conference, he met a technician from GE’s radar test facility at Syracuse, who told Frey that he could hear radar. “He was rather surprised,” Frey later recalled, “when I asked if he would take me to a site and let me hear the radar. It seemed that I was the first person he had told about hearing radars who did not dismiss his statement out of hand.” The man took Frey to his work site near the radar dome at Syracuse. “And when I walked around there and climbed up to stand at the edge of the pulsating beam, I could hear it, too,” Frey remembers. “I could hear the radar going zip-zip-zip.”

This chance meeting determined the future course of Frey’s career. He left his job at General Electric and began doing full-time research into the biological effects of microwave radiation. In 1961, he published his first paper on “microwave hearing,” a phenomenon that is now fully recognized although still not fully explained. He spent the next two decades experimenting on animals to determine the effects of microwaves on behavior, and to clarify their effects on the auditory system, the eyes, the brain, the nervous system, and the heart. He discovered the blood-brain barrier effect: alarming damage to the protective shield that keeps bacteria, viruses, and toxic chemicals out of the brain, occurring at levels of radiation much lower than what is emitted by cell phones today. He proved that nerves, when firing, emit pulses of radiation themselves, in the infrared spectrum. All of Frey’s pioneering work was funded by the Office of Naval Research and the United States Army.

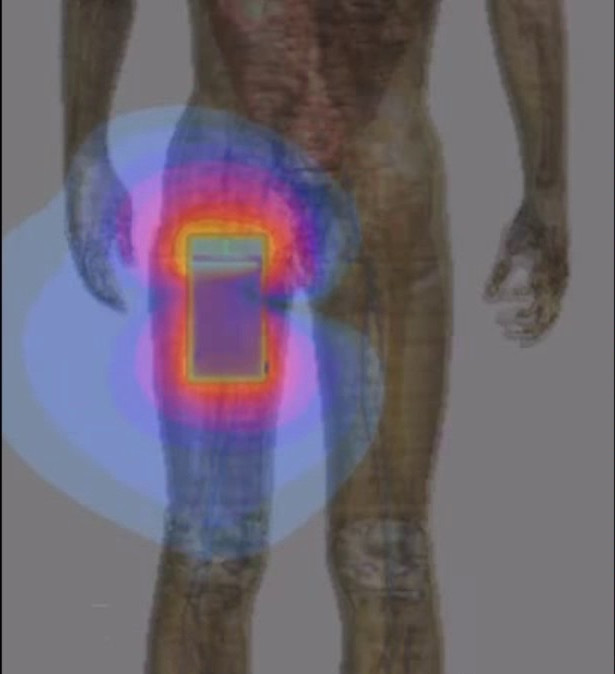

When scientists in the Soviet Union began reporting that they could modify the rhythm of the heart at will with microwave radiation, Frey took a special interest. N. A. Levitina, in Moscow, had found that she could either speed up an animal’s heart rate or slow it down, depending on which part of the animal’s body she irradiated. Irradiating the back of an animal’s head quickened its heart rate, while irradiating the back of its body, or its stomach, slowed it down.

Frey, in his laboratory in Pennsylvania, decided to take this research one step further. Based on the Russian results and his knowledge of physiology, he predicted that if he used brief pulses of microwave radiation, synchronized with the heartbeat and timed to coincide precisely with the beginning of each beat, he would cause the heart to speed up, and might disrupt its rhythm.

It worked like magic. He first tried the experiment on the isolated hearts of 22 different frogs.

The heart rate increased every time. In half the hearts, arrhythmias occurred, and in some of the experiments the heart stopped. The pulse of radiation was most damaging when it occurred exactly one-fifth of a second after the beginning of each beat. The average power density was only six-tenths of a microwatt per square centimeter—roughly ten thousand times weaker than the radiation that a person’s heart would absorb today if he or she kept a cell phone in a shirt pocket while making a call.

And let’s not forget about the pants pocket.
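The power densities quoted in this section mix microwatts and milliwatts per square centimeter, which makes them hard to compare at a glance. The short Python sketch below puts them on one scale. The 6 mW/cm² figure for a phone in a shirt pocket is only what the text's "ten thousand times" ratio implies, not an independent measurement. The 1.0 mW/cm² public limit is an assumption taken from the FCC's general-population maximum permissible exposure for frequencies between 1.5 and 100 GHz; the limit is lower at some lower frequencies.

```python
# Sketch: put the power densities quoted in this section on one scale.
# Assumption (not from the text): the U.S. general-public limit is taken as
# 1.0 mW/cm^2, the FCC maximum permissible exposure for 1.5-100 GHz.

UW_PER_MW = 1000.0  # 1 milliwatt = 1000 microwatts

frey_avg_uw = 0.6                          # Frey's isolated-heart pulses, uW/cm^2
phone_at_heart_uw = frey_avg_uw * 10_000   # text: "roughly ten thousand times" stronger
barron_cutoff_uw = 3.9 * UW_PER_MW         # Barron's exposed/unexposed cutoff, 3.9 mW/cm^2
public_limit_uw = 1.0 * UW_PER_MW          # assumed FCC general-public limit

print(f"Implied phone exposure at the heart: {phone_at_heart_uw / UW_PER_MW:.1f} mW/cm^2")
print(f"Barron cutoff vs. public limit: {barron_cutoff_uw / public_limit_uw:.1f}x")
print(f"Barron cutoff vs. Frey's level: {barron_cutoff_uw / frey_avg_uw:,.0f}x")
```

On these numbers, Barron's "unexposed" cutoff is about 3.9 times the assumed public limit and some 6,500 times the average power density that disrupted the frog hearts in Frey's experiments.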

Frey conducted the experiments with isolated hearts in 1967. Two years later, he tried the same thing on 24 live frogs, with similar though less dramatic results. No arrhythmias or cardiac arrests occurred, but when the pulses of radiation coincided with the beginning of each beat, the heart speeded up significantly.

The effects Frey demonstrated occur because the heart is an electrical organ and microwave pulses interfere with the heart’s pacemaker. But in addition to these direct effects, there is a more basic problem: microwave radiation, and electricity in general, starves the heart of oxygen because of effects at the cellular level. These cellular effects were discovered, oddly enough, by a team that included Paul Dudley White. In the 1940s and 1950s, while the Soviets were beginning to describe how radio waves cause neurasthenia in workers, the United States military was investigating the same disease in military recruits.

The job that was assigned to Dr. Mandel Cohen and his associates in 1941 was to determine why so many soldiers fighting in the Second World War were reporting sick because of heart symptoms.

Mandel Ettelson Cohen (1907-2000)

Although their research spawned a number of shorter articles in medical journals, the main body of their work was a 150-page report that has been long forgotten. It was written for the Committee on Medical Research of the Office of Scientific Research and Development, the office that was created by President Roosevelt to coordinate scientific and medical research related to the war effort.

Unlike their predecessors since the time of Sigmund Freud, this medical team not only took these anxiety-like complaints seriously, but looked for and found physical abnormalities in the majority of these patients. They preferred to call the illness “neurocirculatory asthenia,” rather than “neurasthenia,” “irritable heart,” “effort syndrome,” or “anxiety neurosis,” as it had variously been known since the 1860s. Although the focus of this team was the heart, the 144 soldiers enrolled in their study also had respiratory, neurological, muscular, and digestive symptoms. Their average patient, in addition to having heart palpitations, chest pains, and shortness of breath, was nervous, irritable, shaky, weak, depressed, and exhausted. He could not concentrate, was losing weight, and was troubled by insomnia. He complained of headaches, dizziness, and nausea, and sometimes suffered from diarrhea or vomiting. Yet standard laboratory tests—blood work, urinalysis, X-rays, electrocardiogram, and electroencephalogram—were usually “within normal limits.”

Paul White, one of the two chief investigators—the other was neurologist Stanley Cobb—was already familiar with neurocirculatory asthenia from his civilian cardiology practice, and thought, contrary to Freud, that it was a genuine physical disease. Under the leadership of Cohen, White, and Cobb, the team confirmed that this was indeed the case. Using the techniques that were available in the 1940s, they accomplished what no one in the nineteenth century, when the epidemic began, had been able to do: they demonstrated conclusively that neurasthenia had a physical and not a psychological cause. And they gave the medical community a list of objective signs by which the illness could be diagnosed.

Most patients had a rapid resting heart rate (over 90 beats per minute) and a rapid respiratory rate (over 20 breaths per minute), as well as a tremor of the fingers and hyperactive knee and ankle reflexes. Most had cold hands, and half the patients had a visibly flushed face and neck.

It has long been known that people with disorders of circulation have abnormal capillaries that can be most easily seen in the nail fold—the fold of skin at the base of the fingernails. White’s team routinely found such abnormal capillaries in their patients with neurocirculatory asthenia.

They found that these patients were hypersensitive to heat, pain and, significantly, to electricity—they reflexively pulled their hands away from electric shocks of much lower intensity than did normal healthy individuals.

When asked to run on an inclined treadmill for three minutes, the majority of these patients could not do it. On average, they lasted only a minute and a half. Their heart rate after such exercise was excessively fast, their oxygen consumption during the exercise was abnormally low and, most significantly, their ventilatory efficiency was abnormally low. This means that they used less oxygen, and exhaled less carbon dioxide, than a normal person even when they breathed the same amount of air.

To compensate, they breathed more air, more rapidly, than a healthy person, but they still could not keep running because their bodies were not using enough oxygen.

A fifteen-minute walk on the same treadmill gave similar results. All subjects were able to complete this easier task. However, on average, the patients with neurocirculatory asthenia breathed fifteen percent more air per minute than healthy volunteers in order to consume the same amount of oxygen. And although, by breathing faster, the patients with neurocirculatory asthenia managed to consume the same amount of oxygen as the healthy volunteers, they had twice as much lactic acid in their blood, indicating that their cells were not using that oxygen efficiently.

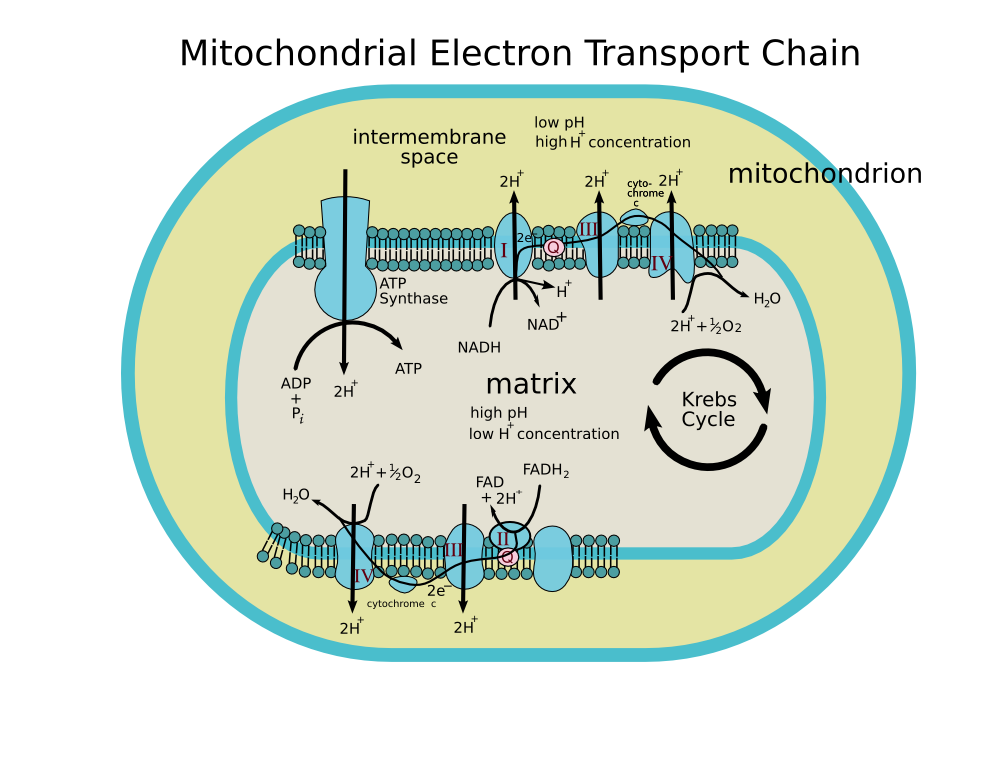

Compared to healthy individuals, people with this disorder were able to extract less oxygen from the same amount of air, and their cells were able to extract less energy from the same amount of oxygen. The researchers concluded that these patients suffered from a defect of aerobic metabolism.

In other words, something was wrong with their mitochondria—the powerhouses of their cells. The patients correctly complained that they could not get enough air. This was starving all of their organs of oxygen and causing both their heart symptoms and their other disabling complaints.

Patients with neurocirculatory asthenia were consequently unable to hold their breath for anything like a normal period of time, even when breathing oxygen.

During the five years of Cohen’s team’s study, several types of treatment were attempted with different groups of patients: oral testosterone; massive doses of vitamin B complex; thiamine; cytochrome c; psychotherapy; and a course of physical training under a professional trainer. None of these programs produced any improvement in symptoms or endurance.

“We conclude,” wrote the team in June 1946, “that neurocirculatory asthenia is a condition that actually exists and has not been invented by patients or medical observers. It is not malingering or simply a mechanism aroused during war time for purposes of evading military service. The disorder is quite common both as a civilian and as a service problem.” They objected to Freud’s term “anxiety neurosis” because anxiety was obviously a result, and not a cause, of the profound physical effects of not being able to get enough air.

In fact, these researchers virtually disproved the theory that the disease was caused by “stress” or “anxiety.” It was not caused by hyperventilation. Their patients did not have elevated levels of stress hormones—17-ketosteroids—in their urine. A twenty-year follow-up study of civilians with neurocirculatory asthenia revealed that these people typically did not develop any of the diseases that are supposed to be caused by anxiety, such as high blood pressure, peptic ulcer, asthma, or ulcerative colitis. However, they did have abnormal electrocardiograms that indicated that the heart muscle was being starved of oxygen, and that were sometimes indistinguishable from the EKGs of people who had actual coronary artery disease or actual structural damage to the heart.

The connection to electricity was provided by the Soviets. Soviet researchers, during the 1950s, 1960s, and 1970s, described physical signs and symptoms and EKG changes, caused by radio waves, that were identical to those that White and others had first reported in the 1930s and 1940s.

The EKG changes indicated both conduction blocks and oxygen deprivation to the heart. The Soviet scientists—in agreement with Cohen and White’s team—concluded that these patients were suffering from a defect of aerobic metabolism. Something was wrong with the mitochondria in their cells. And they discovered what that defect was. Scientists including Yury Dumanskiy, Mikhail Shandala, and Lyudmila Tomashevskaya, working in Kiev, and F. A. Kolodub, N. P. Zalyubovskaya, and R. I. Kiselev, working in Kharkov, proved that the activity of the electron transport chain—the mitochondrial enzymes that extract energy from our food—is diminished not only in animals that are exposed to radio waves, but also in animals exposed to magnetic fields from ordinary electric power lines.

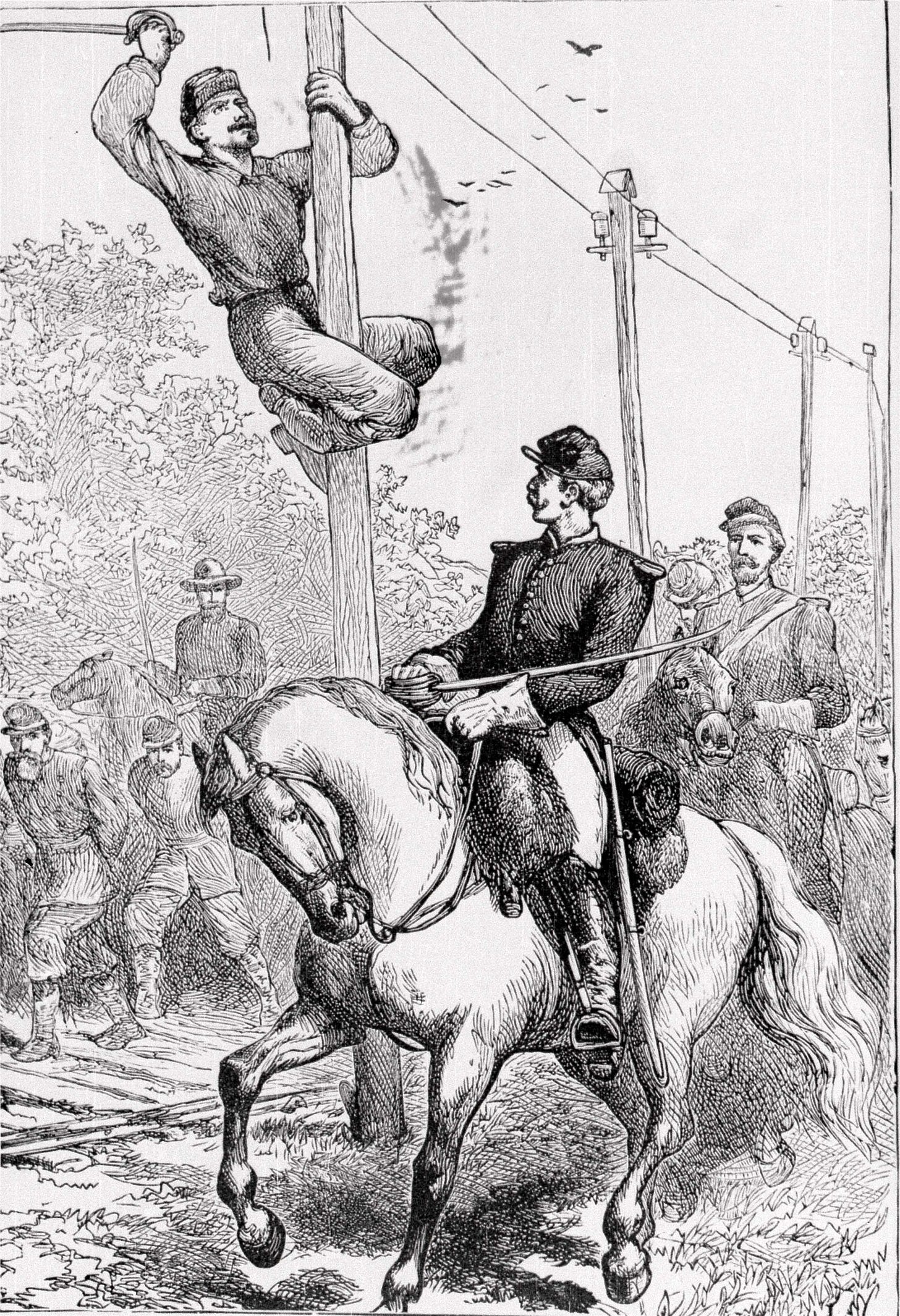

The first war in which the electric telegraph was widely used—the American Civil War—was also the first in which “irritable heart” was a prominent disease. A young physician named Jacob M. Da Costa, visiting physician at a military hospital in Philadelphia, described the typical patient.

“A man who had been for some months or longer in active service,” he wrote, “would be seized with diarrhea, annoying, yet not severe enough to keep him out of the field; or, attacked with diarrhea or fever, he rejoined, after a short stay in hospital, his command, and again underwent the exertions of a soldier’s life. He soon noticed that he could not bear them as formerly; he got out of breath, could not keep up with his comrades, was annoyed with dizziness and palpitation, and with pain in his chest; his accoutrements oppressed him, and all this though he appeared well and healthy. Seeking advice from the surgeon of the regiment, it was decided that he was unfit for duty, and he was sent to a hospital, where his persistently quick acting heart confirmed his story, though he looked like a man in sound condition.”

Exposure to electricity in this war was universal. When the Civil War broke out in 1861, the east and west coasts had not yet been linked, and most of the country west of the Mississippi was not yet served by any telegraph lines. But in this war, every soldier, at least on the Union side, marched and camped near such lines. From the attack on Fort Sumter on April 12, 1861, until General Lee’s surrender at Appomattox, the United States Military Telegraph Corps rolled out 15,389 miles of telegraph lines on the heels of the marching troops, so that military commanders in Washington could communicate instantly with all of the troops at their encampments. After the war all of these temporary lines were dismantled and disposed of.

“Hardly a day intervened when General Grant did not know the exact state of facts with me, more than 1,500 miles off as the wires ran,” wrote General Sherman in 1864. “On the field a thin insulated wire may be run on improvised stakes, or from tree to tree, for six or more miles in a couple of hours, and I have seen operators so skillful that by cutting the wire they would receive a message from a distant station with their tongues.”

Because the distinctive symptoms of irritable heart were encountered in every army of the United States, and attracted the attention of so many of its medical officers, Da Costa was puzzled that no one had described such a disease in any previous war. But telegraphic communications had never before been used to such an extent in war. In the British Blue Book of the Crimean War, a conflict that lasted from 1853 to 1856, Da Costa found two references to troops being admitted to hospitals for “palpitations,” and he found possible hints of the same problem in reports from India during the Indian Rebellion of 1857-58. These were also the only two conflicts prior to the American Civil War in which some telegraph lines were erected to connect command headquarters with troop units. Da Costa wrote that he searched through medical documents from many previous conflicts and did not find even a hint of such a disease prior to the Crimean War.

During the next several decades, irritable heart attracted relatively little interest. It was reported among British troops in India and South Africa, and occasionally among soldiers of other nations.

The number of cases, however, remained small.

Then, shortly after the First World War broke out, at a time when heart disease was still rare in the general population and cardiology did not even exist as a separate medical specialty, soldiers began reporting sick with chest pain and shortness of breath, not by the hundreds, but by the tens of thousands. Out of the six and a half million young men who fought in the British Army and Navy, over one hundred thousand were discharged and pensioned with a diagnosis of “heart disease.”

Most of these men had irritable heart, also called “Da Costa’s syndrome,” or “effort syndrome.” In the United States Army such cases were all listed under “Valvular Disorders of the Heart,” and were the third most common medical cause for discharge from the Army. The same disease also occurred in the Air Force, but was almost always diagnosed as “flying sickness,” thought to be caused by repeated exposure to reduced oxygen pressure at high altitudes.

Similar reports came from Germany, Austria, Italy, and France.

So enormous was the problem that the United States Surgeon-General ordered four million soldiers training in the Army camps to be given cardiac examinations before being sent overseas.

Effort syndrome was “far away the commonest disorder encountered and transcended in interest and importance all the other heart affections combined,” said one of the examining physicians, Lewis A. Conner.

Some soldiers in this war developed effort syndrome after shell shock, or exposure to poison gas. Many more had no such history. All, however, had gone into battle using a newfangled form of communication.

The United Kingdom declared war on Germany on August 4, 1914, the day Germany invaded Belgium and one day after Germany declared war on France. The British army began embarking for France on August 9, and continued on to Belgium, reaching the city of Mons on August 22, without the aid of the wireless telegraph.

While the army was at Mons, the British signal troops were supplied with a 1500-watt mobile radio set having a range of 60 to 80 miles. It was during the retreat from Mons that many British soldiers first became ill with chest pain, shortness of breath, palpitations, and rapid heartbeat, and were sent back to England to be evaluated for possible heart disease.

Exposure to radio was universal and intense. A knapsack radio with a range of five miles was used by the British army in all trench warfare on the front lines. Every battalion carried two such sets, each having two operators, in the front line with the infantry. One or two hundred yards behind, back with the reserve, were two more sets and two more operators. A mile further behind at Brigade Headquarters was a larger radio set, two miles back at Divisional Headquarters was a 500-watt set, and six miles behind the front lines at Army Headquarters was a 1500-watt radio wagon with a 120-foot steel mast and an umbrella-type aerial. Each operator relayed the telegraph messages received from in front of or behind him.

All cavalry divisions and brigades were assigned radio wagons and knapsack sets. Cavalry scouts carried special sets mounted right on their horses, which were called “whisker wireless” because of the antennae that sprouted from the horses’ flanks like the quills of a porcupine.

Most aircraft carried lightweight radio sets, using the metal frame of the airplane as the antenna.

German war Zeppelins and French dirigibles carried much more powerful sets, and Japan had wireless sets in its war balloons. Radio sets on ships made it possible for naval battle lines to be spread out in formations 200 or 300 miles long. Even submarines, while cruising below the surface, sent up a short mast, or an insulated jet of water, as an antenna for the coded radio messages they broadcast and received.

In the Second World War irritable heart, now called neurocirculatory asthenia, returned with a vengeance. Radar joined radio for the first time in this war, and it too was universal and intense.

Like children with a new toy, every nation devised as many uses for it as possible. Britain, for example, peppered its coastline with hundreds of early warning radars emitting more than half a million watts each, and outfitted all its airplanes with powerful radars that could detect objects as small as a submarine periscope. More than two thousand portable radars, accompanied by 105-foot-tall portable towers, were deployed by the British army. Two thousand more “gun-laying” radars assisted anti-aircraft guns in tracking and shooting down enemy aircraft. The ships of the Royal Navy sported surface radars with a power of up to one million watts, as well as air search radars, and microwave radars that detected submarines and were used for navigation.

Gun Laying Radar

The Americans deployed five hundred early-warning radars on board ships, and additional early-warning radars on aircraft, each having a power of one million watts. They used portable radar sets at beachheads and airfields in the South Pacific, and thousands of microwave radars on ships, aircraft, and Navy blimps. From 1941 to 1945 the Radiation Laboratory at the Massachusetts Institute of Technology was kept busy by its military masters developing some one hundred different types of radar for various uses in the war.

It was during this war that the first rigorous program of medical research was conducted on soldiers with this disease. By this time Freud’s proposed term “anxiety neurosis” had taken firm hold among army doctors. Members of the Air Force who had heart symptoms were now receiving a diagnosis of “L.M.F.,” standing for “lack of moral fiber.” Cohen’s team was stacked with psychiatrists. But to their surprise, and guided by cardiologist Paul White, they found objective evidence of a real disease that they concluded was not caused by anxiety.

Largely because of the prestige of this team, research into neurocirculatory asthenia continued in the United States throughout the 1950s; in Sweden, Finland, Portugal, and France into the 1970s and 1980s; and even, in Israel and Italy, into the 1990s. But a growing stigma was attached to any doctor who still believed in the physical causation of this disease. Although the dominance of the Freudians had waned, they left an indelible mark not only on psychiatry but on all of medicine.

Today, in the West, only the “anxiety” label remains, and people with the symptoms of neurocirculatory asthenia are automatically given a psychiatric diagnosis and, very likely, a paper bag to breathe into. Ironically, Freud himself, although he coined the term “anxiety neurosis,” thought that its symptoms were not mentally caused, “nor amenable to psychotherapy.”

Meanwhile, an unending stream of patients continued to appear in doctors’ offices suffering from unexplained exhaustion, often accompanied by chest pain and shortness of breath, and a few courageous doctors stubbornly continued to insist that psychiatric problems could not explain them all. In 1988, the term “chronic fatigue syndrome” (CFS) was coined by Gary Holmes at the Centers for Disease Control, and it continues to be applied by some doctors to patients whose most prominent symptom is exhaustion. Those doctors are still very much in the minority. Based on their reports, the CDC estimates that the prevalence of CFS is between 0.2 percent and 2.5 percent of the population, while their counterparts in the psychiatric community tell us that as many as one person in six, suffering from the identical symptoms, fits the criteria for “anxiety disorder” or “depression.”

To confuse the matter still further, the same set of symptoms was called myalgic encephalomyelitis (ME) in England as early as 1956, a name that focused attention on muscle pains and neurological symptoms rather than fatigue. Finally, in 2011, doctors from thirteen countries got together and adopted a set of “International Consensus Criteria” that recommends abandoning the name “chronic fatigue syndrome” and applying “myalgic encephalomyelitis” to all patients who suffer from “post-exertional exhaustion” plus specific neurological, cardiovascular, respiratory, immune, gastrointestinal, and other impairments.

This international “consensus” effort, however, is doomed to failure. It completely ignores the psychiatric community, which sees far more of these patients. And it pretends that the schism that emerged from World War II never occurred. In the former Soviet Union, Eastern Europe, and most of Asia, the older term “neurasthenia” persists today. That term is still widely applied to the full spectrum of symptoms described by George Beard in 1869, including headache and backache; irritability and insomnia; general malaise; excitation or increase of pain; over-excitation of the pulse; chills, as though the patient were catching a cold; soreness, stiffness, and dull aching; profuse perspiration; numbness; muscle spasms; light or sound sensitivity; metallic taste; and ringing in the ears. In those parts of the world it is generally recognized that exposure to toxic agents, both chemical and electromagnetic, often causes this disease.

According to published literature, all of these diseases—neurocirculatory asthenia, radio wave sickness, anxiety disorder, chronic fatigue syndrome, and myalgic encephalomyelitis—predispose to elevated levels of blood cholesterol, and all carry an increased risk of death from heart disease. So do porphyria and oxygen deprivation. The fundamental defect in this disease of many names is that although enough oxygen and nutrients reach the cells, the mitochondria—the powerhouses of the cells—cannot efficiently use that oxygen and those nutrients, and not enough energy is produced to satisfy the requirements of heart, brain, muscles, and organs. This effectively starves the entire body, including the heart, of oxygen, and can eventually damage the heart. In addition, neither sugars nor fats are efficiently utilized by the cells, causing unutilized sugar to build up in the blood—leading to diabetes—as well as unutilized fats to be deposited in arteries.

And we have a good idea of precisely where the defect is located. People with this disease have reduced activity of a porphyrin-containing enzyme called cytochrome oxidase, which resides within the mitochondria, and delivers electrons from the food we eat to the oxygen we breathe. Its activity is impaired in all the incarnations of this disease. Mitochondrial dysfunction has been reported in chronic fatigue syndrome and in anxiety disorder. Muscle biopsies in these patients show reduced cytochrome oxidase activity. Impaired glucose metabolism is well known in radio wave sickness, as is an impairment of cytochrome oxidase activity in animals exposed to even extremely low levels of radio waves. And the neurological and cardiac symptoms of porphyria are widely blamed on a deficiency of cytochrome oxidase and cytochrome c, the heme-containing enzymes of respiration.

In the twentieth century, particularly after World War II, a barrage of toxic chemicals and electromagnetic fields (EMFs) began to significantly interfere with the breathing of our cells. We know from work at Columbia University that even tiny electric fields alter the speed of electron transport from cytochrome oxidase. Researchers Martin Blank and Reba Goodman thought that the explanation lay in the most basic of physical principles. “EMF,” they wrote in 2009, “acts as a force that competes with the chemical forces in a reaction.” Scientists at the Environmental Protection Agency—John Allis and William Joines—finding a similar effect from radio waves, developed a variant theory along the same lines. They speculated that the iron atoms in the porphyrin-containing enzymes were set into motion by the oscillating electric fields, interfering with their ability to transport electrons.

It was the English physiologist John Scott Haldane who first suggested, in his classic book, Respiration, that “soldier’s heart” was caused not by anxiety but by a chronic lack of oxygen.

Mandel Cohen later proved that the defect was not in the lungs, but in the cells. These patients continually gulped air not because they were neurotic, but because they really could not get enough of it. You might as well have put them in an atmosphere that contained only 15 percent oxygen instead of 21 percent, or transported them to an altitude of 15,000 feet. Their chests hurt, and their hearts beat fast, not because of panic, but because they craved air. And their hearts craved oxygen, not because their coronary arteries were blocked, but because their cells could not fully utilize the air they were breathing.

These patients were not psychiatric cases; they were warnings for the world. For the same thing was also happening to the civilian population: they too were being slowly asphyxiated, and the pandemic of heart disease that was well underway in the 1950s was one result. Even in people who did not have a porphyrin enzyme deficiency, the mitochondria in their cells were still struggling, to some smaller degree, to metabolize carbohydrates, fats, and proteins. Unburned fats, together with the cholesterol that transported those fats in the blood, were being deposited on the walls of arteries.

Humans and animals were not able to push their hearts quite as far as before without showing signs of stress and disease. This takes its clearest toll on the body when it is pushed to its limits, for example in athletes, and in soldiers during war.

The real story of the connection between electricity and heart disease is told by the astonishing statistics.

The results, for 1931 and 1940, are pictured below. Not only is there a five- to sixfold difference in mortality from rural heart disease between the most and least electrified states, but all of the data points come very close to lying on the same line. The more a state was electrified—that is, the more rural households had electricity—the more rural heart disease it had. The amount of rural heart disease was proportional to the number of households that had electricity.

Rate of Rural Heart Disease related to Percent of Electrification in 1931:

Rate of Rural Heart Disease related to Percent of Electrification in 1940:

What is even more remarkable is that the death rates from heart disease in unelectrified rural areas of the United States in 1931, before the Rural Electrification Program got into gear, were still as low as the death rates for the whole United States prior to the beginning of the heart disease epidemic in the nineteenth century.

In 1850, the first census year in which mortality data were collected, a total of 2,527 deaths from heart disease were recorded in the nation. Heart disease ranked twenty-fifth among causes of death in that year. About as many people died from accidental drowning as from heart disease. Heart disease was something that occurred mainly in young children and in old age, and was predominantly a rural rather than an urban disease because farmers lived longer than city-dwellers.

Heart disease rose steadily with electrification, as we saw above, and reached a peak when rural electrification approached 100 percent during the 1950s. Rates of heart disease then leveled off for three decades and afterward began to drop—or so it seems at first glance. A closer look, however, shows the true picture. These are just the mortality rates. The number of people walking around with heart disease—the prevalence rate—actually continued to rise, and is still rising today.

Mortality stopped rising in the 1950s because of the introduction of blood thinners such as heparin and, later, aspirin, both to treat heart attacks and to prevent them. In the succeeding decades the ever more aggressive use of anticoagulants, drugs to lower blood pressure, cardiac bypass surgery, balloon angioplasty, coronary stents, pacemakers, and even heart transplants has simply allowed an ever-growing number of people with heart disease to stay alive. But people are not having fewer heart attacks. They are having more.

The Framingham Heart Study showed that at any given age the chance of having a first heart attack was essentially the same during the 1990s as it was during the 1960s. This came as something of a surprise. By giving people statin drugs to lower their cholesterol, doctors thought they were going to save people from having clogged arteries, which was supposed to automatically mean healthier hearts. It hasn’t turned out that way. And in another study, scientists involved in the Minnesota Heart Survey discovered in 2001 that although fewer hospital patients were being diagnosed with coronary heart disease, more patients were being diagnosed with heart-related chest pain. In fact, between 1985 and 1995 the rate of unstable angina had increased by 56 percent in men and by 30 percent in women.

The number of people with congestive heart failure has also continued to rise steadily.

Researchers at the Mayo Clinic searched two decades of their records and discovered that the incidence of heart failure was 8.3 percent higher during the period 1996-2000 than it had been during 1979-1984.

The true situation is much worse still. Those numbers reflect only people newly diagnosed with heart failure. The increase in the total number of people walking around with this condition is astonishing, and only a small part of the increase is due to the aging of the population. Doctors from Cook County Hospital, Loyola University Medical School, and the Centers for Disease Control examined patient records from a representative sample of American hospitals and found that the numbers of patients with a diagnosis of heart failure more than doubled between 1973 and 1986. A later, similar study by scientists at the Centers for Disease Control found that this trend had continued. The number of hospitalizations for heart failure tripled between 1979 and 2004, the age-adjusted rate doubled, and the greatest increase occurred in people under 65 years of age. A similar study of patients at Henry Ford Hospital in Detroit showed that the annual prevalence of congestive heart failure had almost quadrupled from 1989 to 1999.

Young people, as the 3,000 alarmed doctors who signed the Freiburger Appeal affirmed, are having heart attacks at an unprecedented rate. In the United States, as great a percentage of forty-year-olds today have cardiovascular disease as the percentage of seventy-year-olds that had cardiovascular disease in 1970. Close to one-quarter of Americans aged forty to forty-four today have some form of cardiovascular disease. And the stress on even younger hearts is not confined to athletes. In 2005, researchers at the Centers for Disease Control, surveying the health of adolescents and young adults, aged 15 to 34, found to their surprise that between 1989 and 1998 rates of sudden cardiac death in young men had risen 11 percent, and in young women had risen 30 percent, and that rates of mortality from enlarged heart, heart rhythm disturbances, pulmonary heart disease, and hypertensive heart disease had also increased in this young population.

In the twenty-first century this trend has continued. The number of heart attacks in Americans in their twenties rose by 20 percent between 1999 and 2006, and the mortality from all types of heart disease in this age group rose by one-third. In 2014, among patients between the ages of 35 and 74 who were hospitalized with heart attacks, one-third were below the age of 54.

Developing countries are no better off. They have already followed the developed countries down the primrose path of electrification, and they are following us even faster to the wholesale embrace of wireless technology. The consequences are inevitable. Heart disease was once unimportant in low-income nations. It is now the number one killer of human beings in every region of the world except one. Only in sub-Saharan Africa, in 2017, was heart disease still outranked by diseases of poverty—AIDS and pneumonia—as a cause of mortality.

In spite of the billions being spent on conquering heart disease, the medical community is still groping in the dark. It will not win this war so long as it fails to recognize that the main factor that has been causing this pandemic for a hundred and fifty years is the electrification of the world.
